Sound Computing and Sensor Technology

We were tasked with understanding audio in VR, and our first idea was to make audio in VR more immersive. I was appointed scrum master, which in this case meant managing the team, keeping track of everyone's tasks, and making sure the project kept moving.

During a discussion about what we wanted to test and what we wanted to design, I came up with the idea of an auditory museum where your task is to distinguish different elements of sound. This is also what we ended up deciding on.

After our status seminar, where we received feedback on the idea, it was decided that the project fit better into the category of learning. This didn't change the project much, because the decision was still based on my original idea.

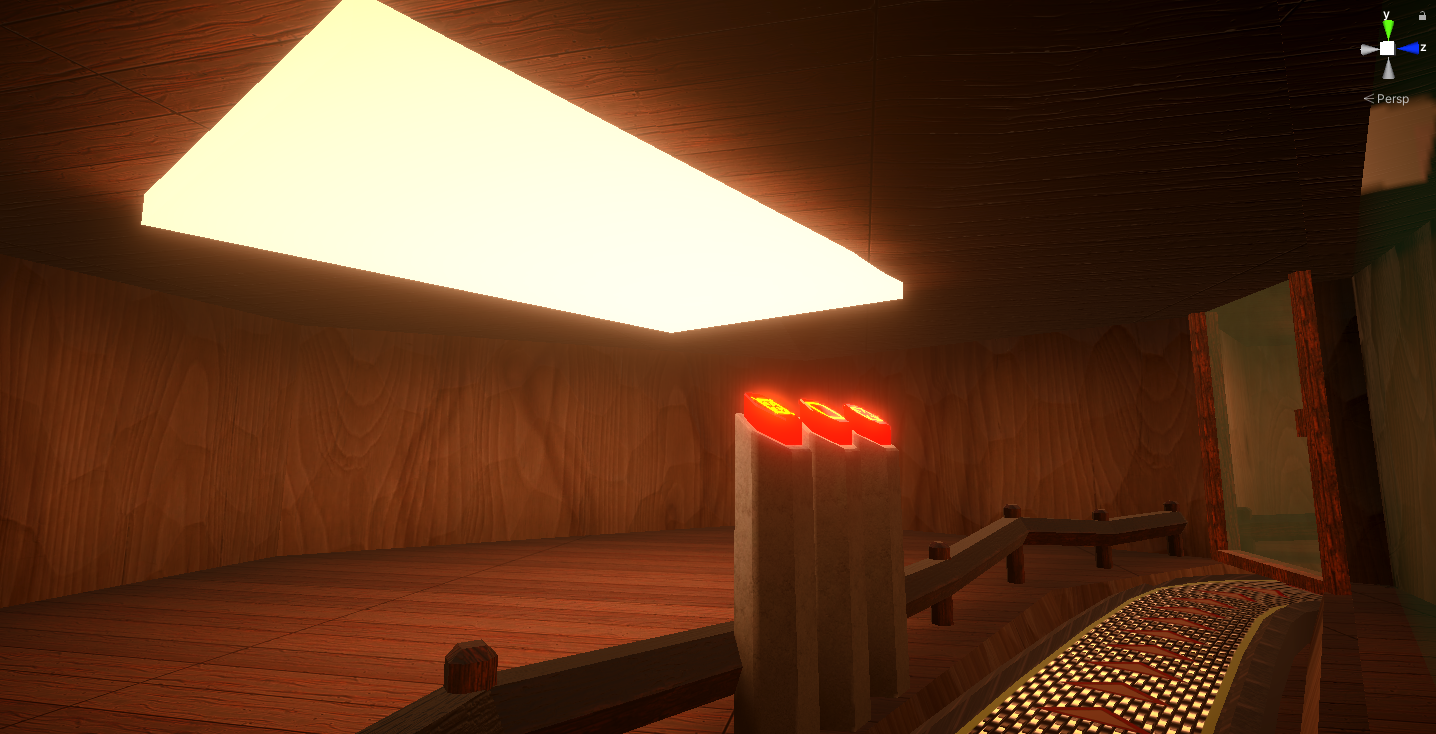

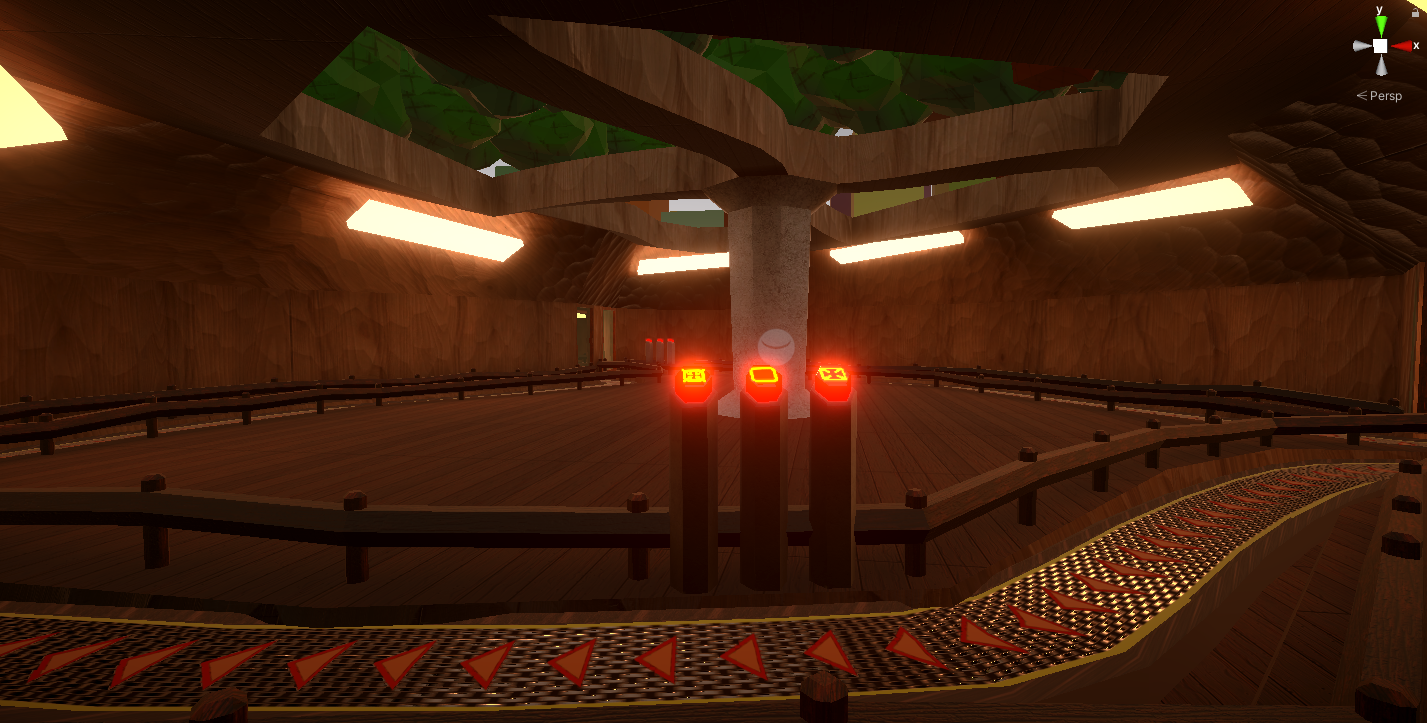

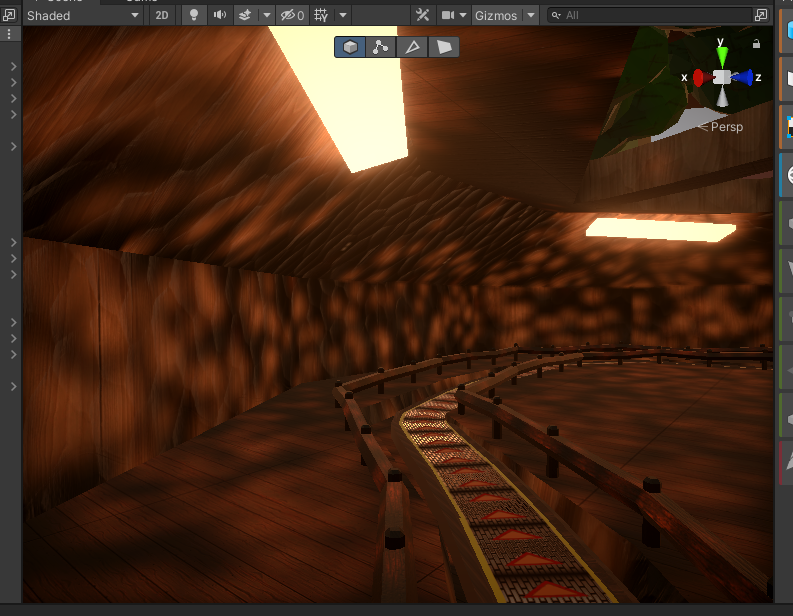

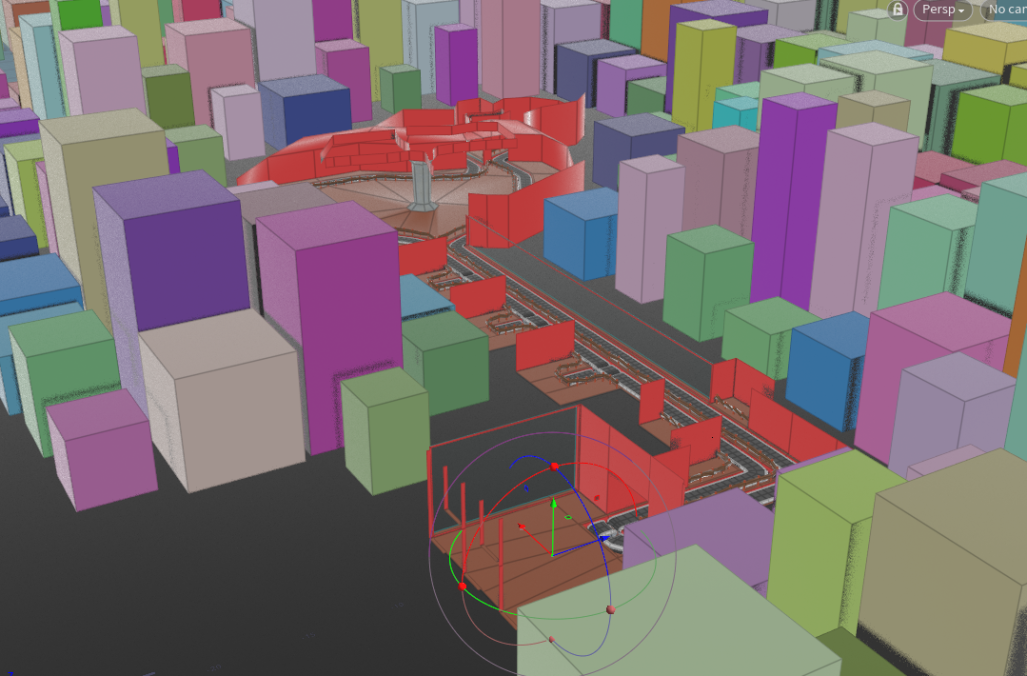

Next, I was tasked with blocking out the level design. I used ProBuilder in Unity for this, to get a sense of the scale of our model, and I also checked how it looked in VR at this stage. Once everyone agreed, I moved on to detailing. At this point I also had to figure out how to implement the conveyor system, covering modelling, texturing, and programming. I started by detailing the block-out in Blender to clean up the model for procedural use, and then used Houdini for the rest. Building the remainder of the model procedurally meant that small changes could still be made to the original model without much hassle.

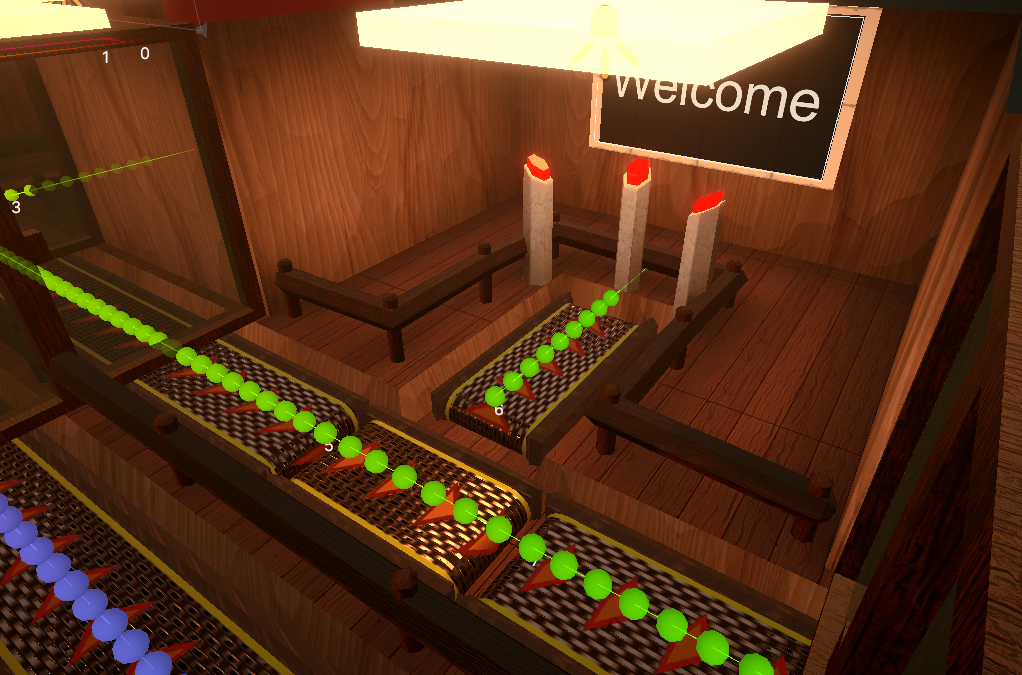

The conveyor was probably the most difficult part of this. Not only did it need to wrap around corners, it also needed a correct UV unwrap so that smooth animation was possible just by moving the UV offset, and it needed to support retargeting (teleporting) to different conveyor belts. In the end I got it working and went back to Unity with the model.
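The reason the unwrap matters is that UV scrolling only looks like steady motion if one unit of texture coordinate covers the same belt length everywhere, including around corners. Under that assumption the per-frame offset update is a single division. Below is a minimal Python sketch of that update. The function name `scroll_uv_offset` and the `meters_per_uv_tile` parameter are hypothetical and not taken from the project. In Unity the resulting value would be written to the belt material's texture offset, for example through `Material.SetTextureOffset`.

```python
def scroll_uv_offset(offset: float, speed_m_per_s: float, dt: float,
                     meters_per_uv_tile: float) -> float:
    """Advance the along-belt UV offset so the texture moves at speed_m_per_s.

    Assumes (hypothetically) a uniform unwrap where one UV unit along the belt
    equals meters_per_uv_tile metres of belt, including around corners.
    """
    offset += speed_m_per_s * dt / meters_per_uv_tile
    # Wrap into [0, 1) so the value never grows large and loses float precision;
    # Python's % also handles negative speeds (belt running backwards).
    return offset % 1.0


if __name__ == "__main__":
    offset = 0.0
    for _ in range(60):  # one second of frames at 60 fps
        offset = scroll_uv_offset(offset, 0.5, 1 / 60, 2.0)
    print(round(offset, 6))  # about 0.25: 0.5 m/s over a 2 m tile for 1 s
```

If the unwrap were not uniform, a constant offset speed would make the texture appear to speed up or slow down on some segments. That is why the unwrap and the animation have to be designed together.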

In Unity, I then had to figure out how to move along the conveyor, which is a spline. Moving along a spline is traditional interpolation, but I needed to get the spline from somewhere. I came up with three different solutions and ended up exporting the curve from Houdini as an .obj. I then found out that Unity doesn't import splines from model files, so I changed the file extension to .spline and wrote an importer for it. With the spline at hand, I created some tooling for the curves, and then they needed the actual implementation. I wanted to be able to move from one curve to another, and I wanted the curves to act as one long curve, even though parts of it can lead into other sections. The tooling I made helped me a lot, and the implementation ended up as simple as interpolating between line segments and directions.

Most materials, such as wood and concrete, were made in Substance, with a few exceptions: I used Illustrator to draw icons for the different buttons, and Substance Designer to procedurally create the conveyor belt material.
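The spline import and the "several curves acting as one long curve" idea can be sketched in a few lines. This is a minimal Python stand-in for the Unity C# code and makes several assumptions. First, it assumes the .spline file is plain OBJ text containing `v` vertex records and `l` polyline records. Second, it joins polylines in the order given, so choosing which section comes next at a branch is left out. Third, the names `parse_obj_polylines` and `ChainedPath` are hypothetical. Each position is found by arc length, so the position moves at a steady speed regardless of how the points are spaced.

```python
import bisect
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def parse_obj_polylines(text: str) -> List[List[Vec3]]:
    """Read OBJ-style 'v' and 'l' records into a list of polylines (assumed format)."""
    verts: List[Vec3] = []
    polylines: List[List[Vec3]] = []
    for raw in text.splitlines():
        parts = raw.split("#", 1)[0].split()  # strip comments, tokenize
        if not parts:
            continue
        if parts[0] == "v":
            x, y, z = (float(p) for p in parts[1:4])
            verts.append((x, y, z))
        elif parts[0] == "l":
            poly = []
            for tok in parts[1:]:
                i = int(tok.split("/")[0])  # ignore optional '/vt' part
                # OBJ indices are 1-based; negative indices count back from the end.
                poly.append(verts[i - 1] if i > 0 else verts[i])
            polylines.append(poly)
    return polylines


class ChainedPath:
    """Hypothetical helper: treats several polylines, end to end, as one curve."""

    def __init__(self, polylines: Sequence[Sequence[Vec3]]):
        self.points: List[Vec3] = []
        for poly in polylines:
            for p in poly:
                if not self.points or self.points[-1] != tuple(p):
                    self.points.append(tuple(p))  # skip duplicated joint points
        if len(self.points) < 2:
            raise ValueError("path needs at least two distinct points")
        # Cumulative arc length at every point, used to sample by distance.
        self.cum = [0.0]
        for a, b in zip(self.points, self.points[1:]):
            self.cum.append(self.cum[-1] + math.dist(a, b))

    @property
    def length(self) -> float:
        return self.cum[-1]

    def sample(self, s: float) -> Tuple[Vec3, Vec3]:
        """Position and unit direction at arc length s (clamped to the path)."""
        s = min(max(s, 0.0), self.length)
        i = bisect.bisect_right(self.cum, s) - 1
        i = min(i, len(self.points) - 2)  # s == length uses the last segment
        a, b = self.points[i], self.points[i + 1]
        seg = self.cum[i + 1] - self.cum[i]
        t = (s - self.cum[i]) / seg
        pos = tuple(pa + (pb - pa) * t for pa, pb in zip(a, b))
        direction = tuple((pb - pa) / seg for pa, pb in zip(a, b))
        return pos, direction
```

An object on the belt then only needs to store its travelled distance `s`, add `speed * dt` each frame, and call `sample(s)`. Moving onto another conveyor could, under this sketch, amount to switching to a different `ChainedPath` and resetting `s`.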

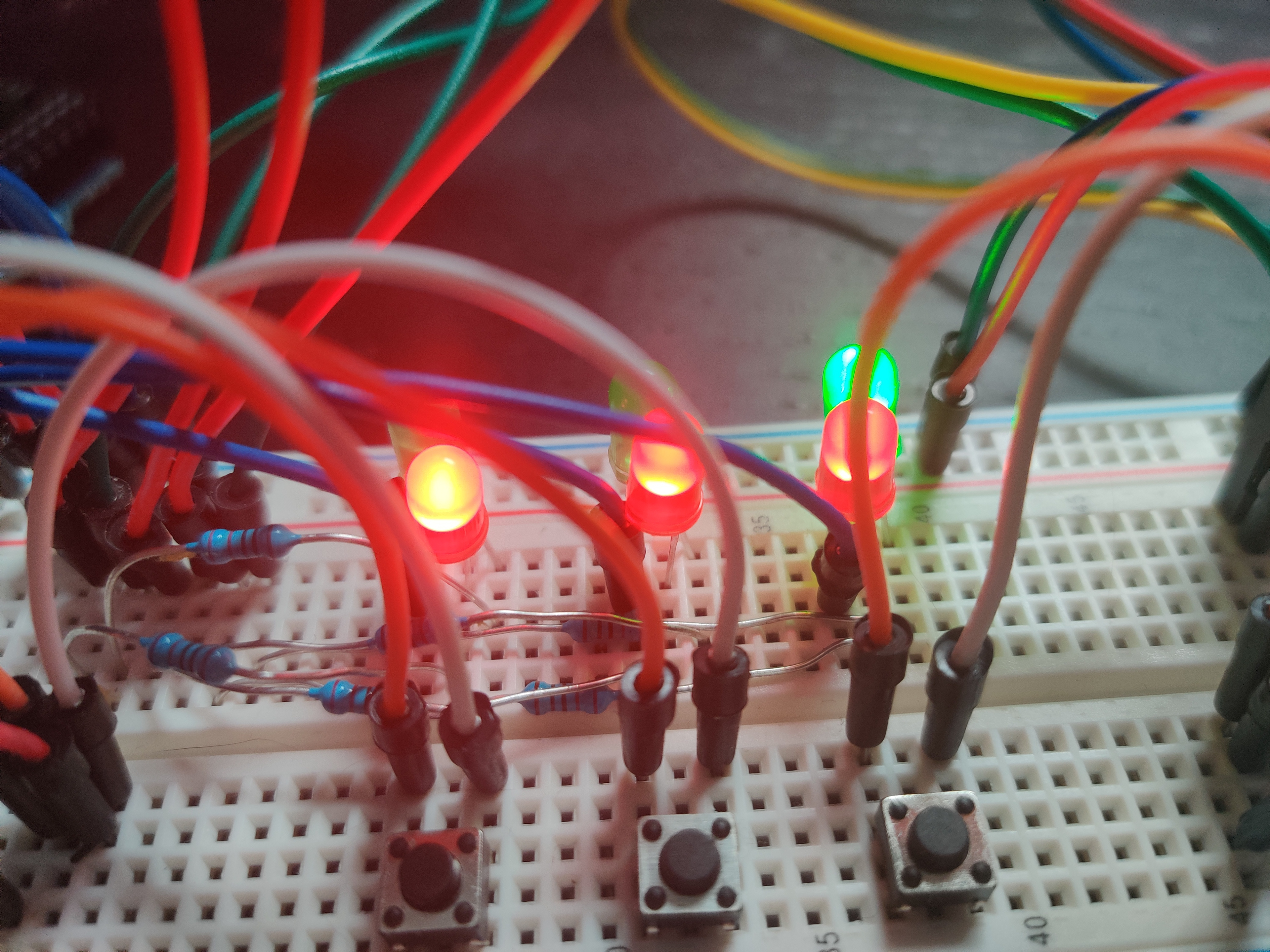

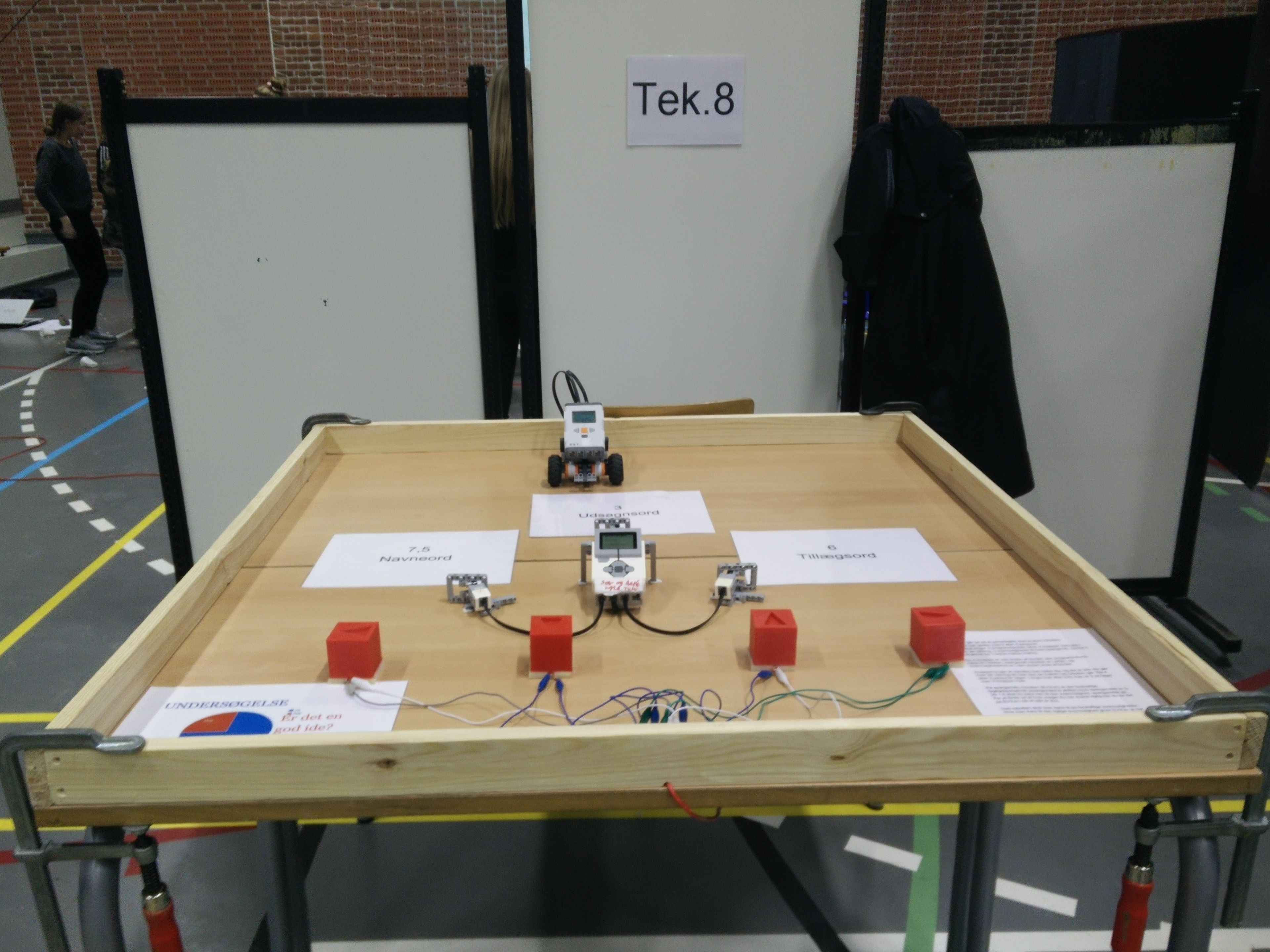

I then moved on to the actual game, which is basically four different mini-games. Those aren't that interesting in themselves, other than having commonalities that could be shared between them. Our project also had to include a physical artifact, which I was also in charge of designing and implementing. It had two parts: a controller that showed states, and a secondary one with a gyroscope.

I programmed it to interact with Unity. In fact, testing the feasibility of communicating from Arduino to Unity was one of the first things I programmed at the very beginning of the project, so this part was easy, with only minor difficulties that were solved through persistence and curiosity.
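A serial link delivers bytes in arbitrary chunks, so the receiving side has to frame complete messages before it can interpret them. The sketch below shows one common approach, newline-delimited text messages, in Python. The message format is entirely hypothetical and not documented in the project: it uses `S,<state>` for the controller state and `G,<x>,<y>,<z>` for gyroscope readings. The names `LineFramer` and `parse_message` are also illustrative. Opening the port and reading from it are left out.

```python
from typing import List, Optional, Tuple, Union

Message = Tuple[str, Union[int, Tuple[float, ...]]]


class LineFramer:
    """Accumulates raw serial bytes and returns complete newline-terminated lines."""

    def __init__(self) -> None:
        self._buf = bytearray()

    def feed(self, data: bytes) -> List[str]:
        self._buf.extend(data)
        lines: List[str] = []
        while (nl := self._buf.find(b"\n")) >= 0:
            # Strip also removes the '\r' that Arduino's Serial.println adds.
            line = self._buf[:nl].decode("ascii", errors="replace").strip()
            del self._buf[: nl + 1]
            if line:
                lines.append(line)
        return lines  # an incomplete trailing line stays buffered


def parse_message(line: str) -> Optional[Message]:
    """Parse the hypothetical 'S,<state>' / 'G,<x>,<y>,<z>' format."""
    kind, *fields = line.split(",")
    try:
        if kind == "S" and len(fields) == 1:
            return ("state", int(fields[0]))
        if kind == "G" and len(fields) == 3:
            return ("gyro", tuple(float(f) for f in fields))
    except ValueError:
        pass
    return None  # unknown or malformed: ignore instead of crashing the game loop
```

For example, feeding `b"S,2\r\nG,0.5,"` returns only `["S,2"]`, and the half-received gyroscope message is completed by the next chunk.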

The project also needed to manipulate sound. We had three options for this in Unity: OnAudioFilterRead, a native audio plugin, or the new DSPGraph. Initially I knew none of them. However, I am very into DOTS and passionate about learning all aspects of it; there are very few talks by the DOTS team I haven't watched at this point. So DSPGraph was the obvious way to go. There was one problem, though: a huge lack of documentation. With a little determination, I figured out how to install it, and by looking at Unity's provided samples I was able to work out how to implement some parts of it. The samples didn't cover everything I needed, so I ended up reading the source code every time I needed to know how to do something. What better way to learn than to read how they wrote DSPGraph? That is one of the things I absolutely adore about DOTS: you can read how it actually works underneath, and the code is written in a way that requires very little knowledge of the program's overall state, which makes it even easier to read :)

Anyway, at this point I am just praising DOTS, which was not my intent, even though it's well deserved :P So what did I implement using DSPGraph? To put it in a data-oriented way: I wanted an input of AudioClips, room size, and absorption material to be transformed into a sound signal where you could hear the difference in material and room size. After all, data-oriented design is all about knowing your data. The implementation included writing mixer kernels, comb filter kernels, and allpass filter kernels, adapting the sample audio player kernel to this project's needs, and wiring it all up as a Schroeder reverb.
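The data transformation described above can be illustrated with a minimal Python sketch. This is not DSPGraph code and not the project's kernels. It assumes a shoebox room whose size and single absorption coefficient are turned into a reverberation time with Sabine's formula, $T_{60} = 0.161\,V / (S\alpha)$. That time then sets the feedback gain of each comb filter, $g = 10^{-3D/(f_s T_{60})}$ for a delay of $D$ samples, so that each comb decays by 60 dB after $T_{60}$ seconds. The classic Schroeder layout is used: parallel feedback combs, averaged (the mixer step), then allpass filters in series. The delay times and the 0.7 allpass gain are illustrative defaults, and all function names are hypothetical.

```python
from typing import List, Sequence


def sabine_rt60(width: float, depth: float, height: float, absorption: float) -> float:
    """Sabine reverberation time (s) for a shoebox room in metres, 0 < absorption <= 1."""
    volume = width * depth * height
    surface = 2 * (width * depth + width * height + depth * height)
    return 0.161 * volume / (surface * absorption)


def comb(x: Sequence[float], delay: int, g: float) -> List[float]:
    """Feedback comb filter: y[n] = x[n] + g * y[n - delay]."""
    y = [0.0] * len(x)
    for n in range(len(x)):
        y[n] = x[n] + (g * y[n - delay] if n >= delay else 0.0)
    return y


def allpass(x: Sequence[float], delay: int, g: float) -> List[float]:
    """Schroeder allpass: y[n] = -g * x[n] + x[n - delay] + g * y[n - delay]."""
    y = [0.0] * len(x)
    for n in range(len(x)):
        xd = x[n - delay] if n >= delay else 0.0
        yd = y[n - delay] if n >= delay else 0.0
        y[n] = -g * x[n] + xd + g * yd
    return y


def schroeder_reverb(x: Sequence[float], sample_rate: int, rt60: float,
                     comb_ms=(29.7, 37.1, 41.1, 43.7),  # illustrative delays
                     allpass_ms=(5.0, 1.7), allpass_g=0.7) -> List[float]:
    out = [0.0] * len(x)
    for ms in comb_ms:
        d = max(1, round(ms * 1e-3 * sample_rate))
        g = 10 ** (-3 * d / (sample_rate * rt60))  # -60 dB after rt60 seconds
        for n, v in enumerate(comb(x, d, g)):
            out[n] += v / len(comb_ms)  # "mixer": average the parallel combs
    for ms in allpass_ms:
        out = allpass(out, max(1, round(ms * 1e-3 * sample_rate)), allpass_g)
    return out
```

With this mapping, a larger room raises $T_{60}$ and a more absorbent material lowers it. Both changes feed straight into the comb gains, which is one way the difference in room size and material could become audible.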

At this point you might be wondering what the other group members do. They're responsible for research and writing, and, most importantly, they keep me grounded, as I can sometimes be a bit ambitious :)

The Drawing I Made of My Idea

First Block Out

Getting an Idea of Detailing

More Detailing, Surrounding Areas and Procedural Conveyor

Tooling In Unity For Conveyors

Main Problem Area for Conveyors

Final Artifacts, Which I Designed and Implemented